There are many important congressional races yet to be decided in the 2024 election, but the presidential race is in the books.

This was an unusual cycle in many respects, not least because the incumbent president withdrew from the race at the eleventh hour and the challenger survived an assassination attempt. But it was also unusual in that some major prediction markets began to list contracts referencing election outcomes, thus producing high-frequency forecasts that can now be compared with the outputs of more conventional statistical models.

Attention has (understandably) been focused on the headline markets predicting the winner of the presidency, but an enormous amount of data has also been generated regarding outcomes at the state and congressional district level, margins of victory in the popular vote and electoral college, and various permutations and combinations such as a sweep of all swing states. These events are all correlated, of course, but not perfectly so, and offer us an opportunity to evaluate the relative accuracy of models and markets.

There are some who feel that no further evaluation is necessary, that they can already proclaim with confidence that markets are “a hell of a lot more accurate than the polls and pundits.” This is premature to say the least. The question of relative accuracy cannot be answered by looking at one or two events, any more than it can be answered by logic alone.

Markets have some significant advantages over models—they can take on board novel sources of information, can deal with historically unprecedented situations, and can respond very rapidly to evolving events. But they also have potential shortcomings as forecasting mechanisms. They can come to be dominated by a few large traders whose views may be idiosyncratic, they are subject to overreaction and correction, and when they reference events whose objective likelihood of occurrence depends on subjective assessments of this probability, they create incentives for manipulation.

So to answer the question of relative accuracy, we need to look beyond the headline market and examine many other events for which models and markets were simultaneously generating forecasts.

To take just one example that complicates the picture a bit, consider the reaction of models and markets to a very surprising poll by J. Ann Selzer, released on November 2, that showed Harris leading in Iowa. On Polymarket, the likelihood of Trump winning Iowa dropped quickly to 73 percent and stayed below 85 percent until the morning of the election:

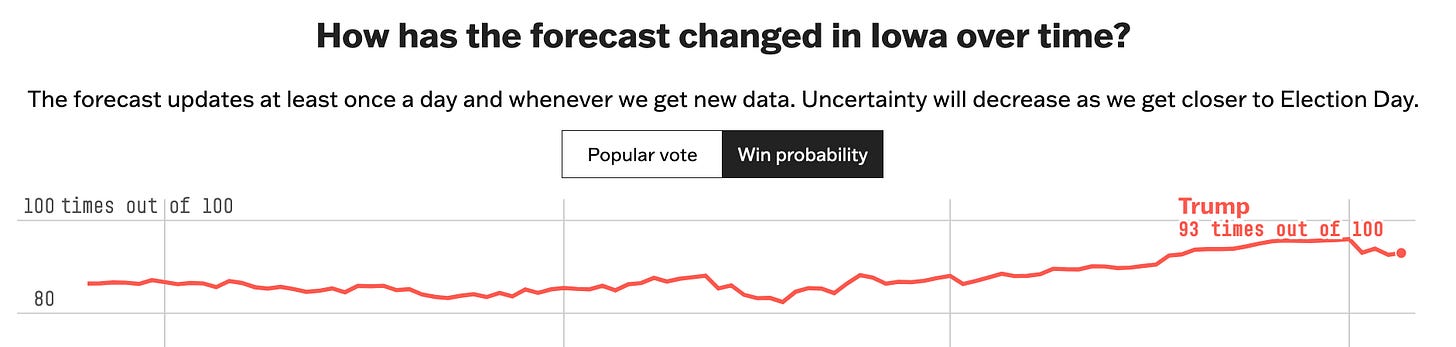

Meanwhile, the response to the poll on FiveThirtyEight was much more muted. At no point during the week before the election did the probability drop below 93 percent (the last vertical line in the figure below marks the start of November):

If one applies standard measures of performance to these forecasts, for instance the average daily Brier score (the mean squared difference between each day's forecast probability and the realized outcome, where lower is better), it is the model that comes out ahead. Similarly, under the profitability test, a virtual trader with beliefs inherited from the model would have bet quite heavily on Trump to win the state after the poll was released, and would have ended up making a profit.
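Both tests can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used to evaluate the Iowa forecasts: the function names, the daily-series input format, and the trading rule (one contract per day on whichever side the model considers underpriced, bought at the market price, with fees, spreads and position limits ignored) are all choices made here for clarity. The series in the usage example are made-up placeholders, not the actual Polymarket or FiveThirtyEight numbers.

```python
def brier(p: float, outcome: int) -> float:
    """Squared error between a probability forecast and a 0/1 outcome (lower is better)."""
    return (p - outcome) ** 2


def average_daily_brier(daily_probs: list[float], outcome: int) -> float:
    """Mean of the Brier scores of one forecaster's daily probabilities for a single event."""
    return sum(brier(p, outcome) for p in daily_probs) / len(daily_probs)


def virtual_trader_profit(model_probs: list[float], market_prices: list[float], outcome: int) -> float:
    """Profit of a hypothetical trader who holds the model's beliefs.

    Each day the trader buys one contract at the market price on whichever side
    the model considers underpriced: 'yes' if model > market, 'no' if model < market.
    A contract pays 1 if its side wins and 0 otherwise.
    """
    profit = 0.0
    for p, q in zip(model_probs, market_prices):
        if p > q:  # 'yes' looks cheap: pay q, receive the outcome
            profit += outcome - q
        elif p < q:  # 'no' looks cheap: pay 1 - q, receive 1 - outcome
            profit += (1 - outcome) - (1 - q)
    return profit


if __name__ == "__main__":
    # Made-up placeholder series for an event that occurred (outcome = 1);
    # these are NOT the real model or market forecasts.
    model = [0.95, 0.94, 0.93]
    market = [0.88, 0.80, 0.84]
    print("model Brier: ", average_daily_brier(model, 1))
    print("market Brier:", average_daily_brier(market, 1))
    print("trader profit:", virtual_trader_profit(model, market, 1))
```

With a forecaster that stays closer to the realized outcome, both tests point the same way: a lower average Brier score, and a positive profit for the trader who acts on that forecaster's beliefs against the market.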

This is not an isolated case. There are several congressional races, as well as events related to the popular vote and a sweep of the swing states, where some models would have come out ahead of markets. For instance, on the eve of the election, the likelihood that Harris would win the popular vote was 76 percent on Kalshi, 73 percent on Polymarket, and 71 percent on FiveThirtyEight. The differences here are not large, but again either accuracy test would have placed the model ahead.
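The popular-vote numbers make the arithmetic easy to check. Harris did not win the popular vote, so the outcome is 0 and each forecast's single-day Brier score is just the square of its probability. The sketch below is illustrative only: it uses the eve-of-election figures quoted above and, as a simplifying assumption, treats each market's quoted probability as the price of a "Yes" contract, ignoring spreads and fees.

```python
# Eve-of-election probabilities that Harris would win the popular vote, as quoted in the text.
forecasts = {"Kalshi": 0.76, "Polymarket": 0.73, "FiveThirtyEight": 0.71}
outcome = 0  # Harris did not win the popular vote

# Single-forecast Brier score: (p - outcome)^2, lower is better.
scores = {name: (p - outcome) ** 2 for name, p in forecasts.items()}
for name, score in sorted(scores.items(), key=lambda kv: kv[1]):
    print(f"{name:16s} {score:.4f}")

# Profitability test: a trader sharing the model's belief buys 'No' wherever the
# market puts Harris higher than the model does. Assumes price = quoted probability.
model_p = forecasts["FiveThirtyEight"]
for market in ("Kalshi", "Polymarket"):
    q = forecasts[market]
    if model_p < q:  # the model sees 'No' as underpriced
        no_price = 1 - q
        profit = (1 - outcome) - no_price
        print(f"Buy 'No' on {market} at {no_price:.2f}: profit {profit:.2f} per contract")
```

The model has the lowest Brier score, and a trader acting on its belief would have bought "No" in both markets and been paid out on every contract.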

The point is that one needs to sift through the numbers before reaching definitive conclusions about relative performance. The data will also allow us to compare markets with each other and make judgements about which designs work best. For instance, we might hope to gain some understanding of the effects of transaction observability, know-your-client requirements, participant eligibility, and limits on position size. All this will take time and effort, and until the work is done it’s probably best to reserve judgement.